If your web or mobile application involves user-generated content, you may encounter users who upload inappropriate photos or images to your application. These could be images which offend other users - adult content, violent photos, etc. - or images which cause your site to violate laws or regulations.

There are two ways to identify and remove such images: you can either require approval of each image before it is displayed to your users, or display images immediately after upload, and then quickly remove them from your site as soon as a moderator has found them to be inappropriate.

Another issue is who does the moderation. You can hire and train internal personnel to manually moderate your images. In Cloudinary's image management solution, we provide a manual moderation web interface and API to help do this efficiently. However, manual moderation is time-consuming and expensive, so we wanted to provide an additional option - automatic moderation of images as your users upload them.

Introducing Cloudinary's WebPurify add-on - seamless automatic image moderation

WebPurify is an online service for automatically moderating and filtering out inappropriate photos, preventing adult content or other kinds of offensive photos from creeping into your website. WebPurify performs quick and thorough human review of your user-uploaded images, behind the scenes.

Cloudinary can manage your entire image lifecycle - upload and cloud storage of images, manipulating images to match your graphic design, and delivery of images to users over a fast CDN. With our new WebPurify Image Moderation add-on, you can also use Cloudinary to seamlessly perform automatic moderation of images uploaded by your users. Rejected images are automatically removed from your site, and you are notified of every rejection.

Enabling automatic image moderation by WebPurify within Cloudinary

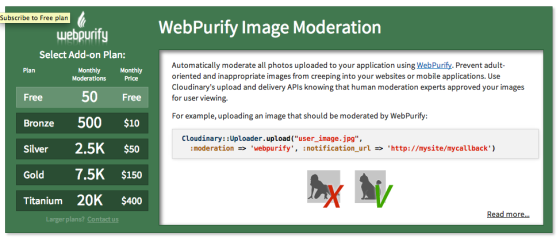

Sign up for a Cloudinary account if you don't have one already, then subscribe to the WebPurify add-on (a free tier is available).

Cloudinary provides an image upload API. To request moderation, simply set the moderation parameter to webpurify when calling the upload API.
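
As a minimal sketch in Python, the call might look like the following. It assumes the `cloudinary` Python package is installed and that credentials are supplied through the `CLOUDINARY_URL` environment variable; the helper name `upload_for_moderation` and its injectable `upload` argument are ours, added only so the sketch can be exercised without network access.

```python
from typing import Any, Callable, Dict, Optional


def upload_for_moderation(
    file_path: str,
    upload: Optional[Callable[..., Dict[str, Any]]] = None,
) -> Dict[str, Any]:
    """Upload an image and ask for it to be moderated by WebPurify."""
    if upload is None:
        # Imported lazily so the sketch can be read and tested without the SDK.
        # Assumption: `pip install cloudinary` and CLOUDINARY_URL is set.
        import cloudinary.uploader

        upload = cloudinary.uploader.upload
    # The only moderation-specific part of the call is this one parameter.
    return upload(file_path, moderation="webpurify")
```

Calling `upload_for_moderation("user_photo.jpg")` with the SDK configured would send the file to Cloudinary with moderation requested; the file name here is only an example.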

The uploaded image is marked as 'pending moderation' and is automatically sent to WebPurify. Moderation is performed asynchronously after the upload call is completed. You can decide whether to display the image to your users immediately, or wait for moderation approval. If you decide not to wait, the image is delivered with short-term cache settings, allowing fast removal of rejected images.

Note - Cloudinary delivers images using a fast CDN (images are cached close to the geographical location of your users). While an image is pending moderation, the cache will be set to 'short-term', so that if it's rejected in moderation, it will be easy to roll back and remove it from all cache servers.

If you are already using Cloudinary for user-uploaded images, simply subscribe to the WebPurify add-on and set the moderation parameter as above. It's that easy to ensure all the images on your dynamic site are safe for users and comply with relevant laws.

Handling moderation results

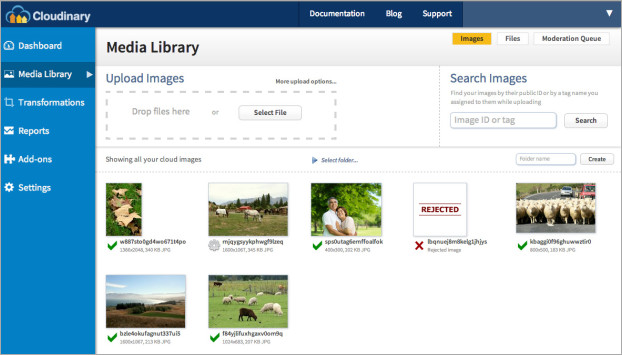

If an uploaded photo is approved by the WebPurify add-on, it is marked as 'approved' in your cloud-based media library managed by Cloudinary. Cloudinary then switches the approved image, and all its transformed versions (Cloudinary can generate thumbnails, resized versions, and many other on-the-fly image manipulations), to long-term caching. This optimizes delivery of the image to users around the world, now that there is no longer a need to "roll back" the image in case it is rejected.

When an uploaded photo is rejected by the WebPurify add-on, because it includes inappropriate content, the image is automatically deleted from your media library, all delivered versions of the rejected image expire quickly, and the rejected image is no longer displayed. The original rejected images are stored in the backup repository of your Cloudinary account, which you can still access through our web interface.

You may want to automatically update your application when an image is rejected by the WebPurify add-on. In order to do that, you can set the notification_url upload parameter to a URL on your site:
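
For example, the upload options could be assembled along these lines. This is a sketch only: the helper name `moderation_upload_options` and the example URL are hypothetical, and the URL check is our own addition rather than something Cloudinary requires.

```python
from typing import Dict
from urllib.parse import urlparse


def moderation_upload_options(notification_url: str) -> Dict[str, str]:
    """Build upload options that request moderation and a result callback."""
    parts = urlparse(notification_url)
    # Our own sanity check: the callback must be an absolute http(s) URL.
    if parts.scheme not in ("http", "https") or not parts.netloc:
        raise ValueError(f"not an absolute http(s) URL: {notification_url!r}")
    return {
        "moderation": "webpurify",
        "notification_url": notification_url,
    }


# Hypothetical endpoint on your own site.
options = moderation_upload_options("https://example.com/hooks/moderation")
# With the Cloudinary Python SDK installed and configured, the options would
# be passed as keyword arguments:
#   cloudinary.uploader.upload("user_photo.jpg", **options)
```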

An HTTP callback request will be sent to your servers when the moderation process is completed (either approved or rejected). The request data includes the identifier and URLs of the moderated images.
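
On the receiving side, your endpoint mostly needs to parse the JSON body and react to rejections. The sketch below uses only the Python standard library; the function name `rejected_public_id` is ours, and how you wire it into your web framework is up to you.

```python
import json
from typing import Optional


def rejected_public_id(body: bytes) -> Optional[str]:
    """Return the public_id of a rejected image, or None for anything else."""
    payload = json.loads(body)
    # Ignore notifications that are not about moderation.
    if payload.get("notification_type") != "moderation":
        return None
    # Approved images need no action; only rejections are reported.
    if payload.get("moderation_status") != "rejected":
        return None
    return payload.get("public_id")
```

Your application could then use the returned identifier to remove the image from its own database or notify the uploader. A production handler should also authenticate the incoming request, which this sketch leaves out. The field names read here are the ones in the sample request that follows.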

    {
      "moderation_response": "rejected",
      "moderation_status": "rejected",
      "moderation_kind": "webpurify",
      "moderation_updated_at": "2014-03-02T20:47:48Z",
      "public_id": "nuzn4riqxhhzfyfljjxv",
      "uploaded_at": "2014-03-02T20:47:47Z",
      "version": 1393793267,
      "url": "https://res.cloudinary.com/demo/image/upload/v1393793267/nuzn4riqxhhzfyfljjxv.jpg",
      "secure_url": "https://res.cloudinary.com/demo/image/upload/v1393793267/nuzn4riqxhhzfyfljjxv.jpg",
      "etag": "06778590d96907b60b5fa83795e7df3b",
      "notification_type": "moderation"
    }

In addition, Cloudinary provides tools for further managing the image moderation flow. You can use Cloudinary's Admin API to list the queue of images pending moderation, and list rejected or approved images. Or you can view these lists using Cloudinary's Media Library web interface.

You can also use the API or web interface to alter the automatic moderation decision. You can browse rejected or approved images, and manually approve or reject them.

If you choose to approve a previously rejected image, the original version of the rejected image will be restored from backup. If you choose to reject a previously approved image, cache invalidation will be performed for the image so it is gradually erased from all the CDN cache servers.
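
The listing and override steps can be sketched with the Admin API in the Python SDK. This assumes the `cloudinary` package with its `cloudinary.api.resources_by_moderation` and `cloudinary.api.update` calls; the helper names and the injectable `api` argument are ours, added so the logic can be tried without a live account. Please check the add-on documentation for the exact parameters before relying on it.

```python
from typing import Any, Dict, List, Optional


def list_moderated(status: str, api: Optional[Any] = None) -> List[str]:
    """List public IDs of images whose WebPurify status is `status`.

    `status` is expected to be "pending", "approved" or "rejected".
    """
    if api is None:
        # Assumption: the Cloudinary SDK is installed and configured.
        import cloudinary.api as api
    result = api.resources_by_moderation("webpurify", status)
    return [resource["public_id"] for resource in result.get("resources", [])]


def override_decision(
    public_id: str, approve: bool, api: Optional[Any] = None
) -> Dict[str, Any]:
    """Manually approve or reject an image, overriding the automatic result."""
    if api is None:
        import cloudinary.api as api
    new_status = "approved" if approve else "rejected"
    return api.update(public_id, moderation_status=new_status)
```

For instance, `override_decision(public_id, approve=True)` on an identifier returned by `list_moderated("rejected")` would correspond to approving a previously rejected image.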

For more details about the various moderation management APIs, take a look at our detailed documentation of the WebPurify image moderation add-on.

Summary

Websites enabling user-uploaded content have become very common. Most web and mobile apps skip moderation and take the chance that adult or inappropriate content might be uploaded and displayed to their users. Some apps support user reporting of problematic content, but this might be too late in certain scenarios, and the reporting feature can be abused as well.

Cloudinary takes care of the entire image lifecycle - uploading images to the cloud, dynamically manipulating them to match your graphic design, and delivering them to users optimized and cached via a fast CDN. Now with the WebPurify add-on, Cloudinary can automatically and quickly filter out problematic photos. This makes the decision of whether to moderate uploaded photos a no-brainer. You can focus on building great applications, allow your users to freely upload content to your app, and let Cloudinary, together with WebPurify, make sure your app displays high-quality images that are safe for any audience.

You are welcome to share any question or feedback you have regarding the automatic image moderation solution described in this post.